As part of the 6.861 NLP class, "Quantitative Methods for Natural Language Processing", we designed our own research project. We chose to investigate machine translation (MT), as all of us had a strong linguistics background and knew at least 5 languages. Initially, we sought to analyze the current abilities of state-of-the-art (SoTA) MT systems, such as DeepL and Google Translate (GT); then, based on the deficiencies we found, we would create our own model to remedy them. As such, our research would include both "analysis" and "invent-something-new" components.

The key to an insightful project would be to take language families into account. Because MT systems often fail to incorporate the syntactic, contextual, and morphological features of languages, we test MT performance with respect to language families. That is, we assess whether translations within a language family (e.g. Spanish → French) perform better than translations across language families (e.g. Spanish → Chinese).

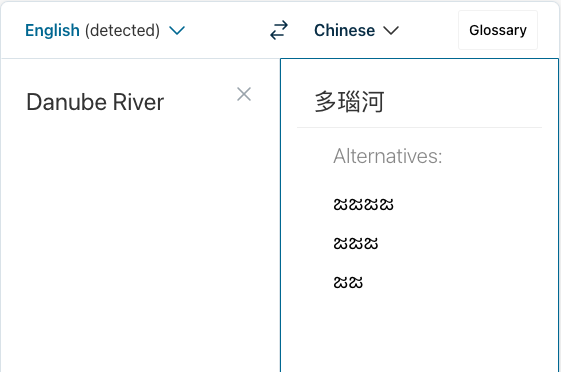
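
To make the within-family versus across-family distinction concrete, here is a minimal sketch of how a translation direction could be labeled. The `LANGUAGE_FAMILY` table and the `pair_type` helper are hypothetical names introduced only for illustration; the table covers just the languages mentioned above, and grouping at the top-level family (Indo-European, Sino-Tibetan) rather than at a finer branch such as Romance is an assumption.

```python
# Hypothetical lookup table covering only the languages named in the text.
# Granularity (top-level family rather than branch) is an assumption.
LANGUAGE_FAMILY = {
    "Spanish": "Indo-European",
    "French": "Indo-European",
    "Chinese": "Sino-Tibetan",
}


def pair_type(source: str, target: str) -> str:
    """Label a translation direction as intra- or inter-family."""
    if LANGUAGE_FAMILY[source] == LANGUAGE_FAMILY[target]:
        return "intra-family"
    return "inter-family"


print(pair_type("Spanish", "French"))   # intra-family
print(pair_type("Spanish", "Chinese"))  # inter-family
```

Scores for each MT system could then be grouped by this label and compared.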

After consulting with Prof. Yoon Kim about our idea, we made a few refinements. Our project would focus exclusively on analyzing existing systems, DeepL and GT, and we would add an extra twist: pivot languages. A pivot (P) is a "middle-man" language used to improve the translation A → B by performing it in two steps: A → P, then P → B. Generally, P is more widely studied than A and B; the best example of a pivot language is English. GT is well known to use pivoting; DeepL is not known to.
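
The two-step idea can be sketched as a simple composition of translation calls. This is only an illustration under stated assumptions: `pivot_translate`, `toy_translate`, and the tiny word table are hypothetical and stand in for a real MT system; nothing here reflects how DeepL or GT work internally.

```python
from typing import Callable

# A translator takes (text, source_lang, target_lang) and returns the translation.
Translator = Callable[[str, str, str], str]


def pivot_translate(text: str, source: str, target: str,
                    translate: Translator, pivot: str = "en") -> str:
    """Translate source -> target in two steps: source -> pivot, then pivot -> target."""
    intermediate = translate(text, source, pivot)
    return translate(intermediate, pivot, target)


# Hypothetical toy dictionary standing in for a real MT system.
TOY_TABLE = {
    ("es", "en"): {"gato": "cat"},
    ("en", "fr"): {"cat": "chat"},
}


def toy_translate(text: str, source: str, target: str) -> str:
    """Word-by-word lookup; unknown words are passed through unchanged."""
    table = TOY_TABLE[(source, target)]
    return " ".join(table.get(word, word) for word in text.split())


print(pivot_translate("gato", "es", "fr", toy_translate))  # chat
```

Note that the toy table has no direct Spanish → French entry, so the translation is only possible through the English pivot. With real systems, any error made in the first step carries over into the second.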

Our initial proposal is attached below. Note that it is mildly out of date with respect to the current implementation and will be updated. To see further progress on this project, such as our methodology, results, and potential further research, click here.